
How Data Guided Phase 2 of Our Chatbot Project at UNSW

Jason Yang

Jason is a full stack developer with a strong focus on the latest technologies, such as Xamarin mobile development and Azure Bot Service. He also develops custom web and desktop applications, and contributes to innovative, groundbreaking software solutions that meet our business demand for agility, flexibility and mobility.

September 26th, 2018

Earlier this year, we joined forces with Microsoft to develop a chatbot pilot project at the University of New South Wales’ School of Mechanical and Manufacturing Engineering.

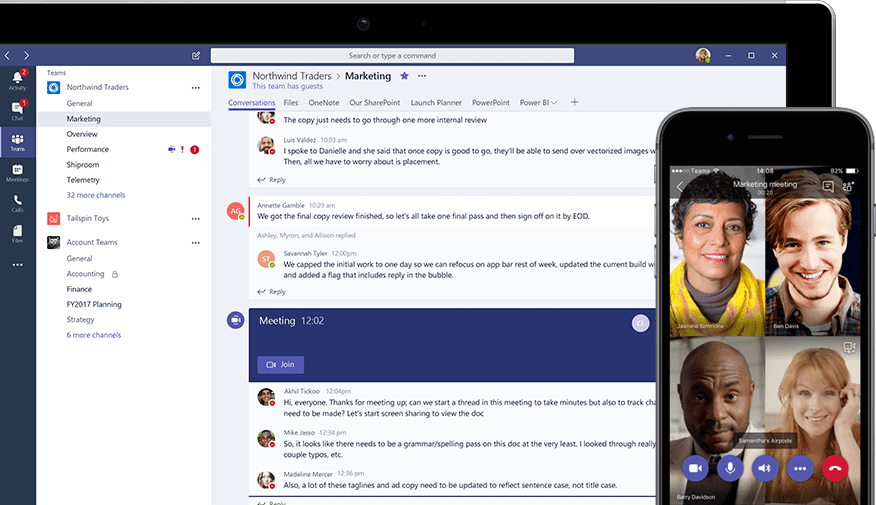

In the first phase, which we wrote about previously, we designed and deployed a bot to gather and identify student questions within a course’s Microsoft Teams environment. These questions were forwarded to tutors and lecturers to answer.

After one semester, we looked at the data we’d collected, such as the types of questions students were asking, how they asked questions, and where they ran into difficulties.

Here’s a summary of what we learned, and how we used these inputs to guide the second phase of the project.

What we learned in phase one

The data from phase one revealed three key insights.

- Students asked a lot of questions about information that doesn’t change

We realised that most questions related to the course booklet and the course in general (e.g. “When is the next assignment due?” or “Which tutorial group am I in?”). While the answers may vary from student to student, they usually don’t change over the course of a semester.

- We needed to make it easier for students to ask the bot questions, and to search for answers

Students sometimes forgot to tag the bot when asking a question, and would then attempt to tag the bot in a reply. As a result, the bot either failed to identify the question or misidentified it, and missed the opportunity to pass it on to be answered.

Due to the nature of conversations within Microsoft Teams, students also reported difficulties searching for questions that had already been asked and/or answered.

- Extra functionality in specific areas would add tremendous value

The data revealed some obvious areas to add new functionality. These included:

- Giving the bot the ability to learn how to answer questions

- Allowing the bot to attempt to answer questions based on past answers

- Creating a user interface to summarise questions asked

- Enabling users to filter and sort by question status (i.e. answered or unanswered questions) and topic.

From insight to action

We aimed to address these issues in phase two, which was recently completed. We gave the bot four key new capabilities to deliver an improved user experience.

- One-on-one dynamic Q&A

We made it easier for students to find answers relevant to their individual circumstances. To do this, we developed a customised Q&A functionality.

This means the bot can provide contextual answers based on dynamic factors, such as who the student is, and on what day they are asking. It draws on information such as the student-tutorial mapping list, assessment and exam timetables and the course outline to answer these questions without input from university staff.
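To make the idea concrete, here is a minimal Python sketch of how an answer to “When is my tutorial?” could be assembled from a student-tutorial mapping and the date the question is asked. The post doesn’t describe the bot’s actual implementation, so the `Tutorial` type, the `TUTORIALS` and `STUDENT_GROUP` tables, the student IDs and the `next_tutorial` function are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Tutorial:
    group: str
    weekday: int  # 0 = Monday ... 6 = Sunday, matching date.weekday()
    time: str
    room: str


# Hypothetical data standing in for the student-tutorial mapping list.
TUTORIALS = {
    "T1": Tutorial("T1", 1, "10:00", "Room A"),  # Tuesdays
    "T2": Tutorial("T2", 3, "14:00", "Room B"),  # Thursdays
}
STUDENT_GROUP = {"z1111111": "T1", "z2222222": "T2"}


def next_tutorial(student_id: str, today: date) -> str:
    """Answer "When is my tutorial?" for one student on a given day."""
    group = STUDENT_GROUP.get(student_id)
    if group is None:
        # Unknown student: the mapping list can't answer this one.
        return "I couldn't find your tutorial group."
    tut = TUTORIALS[group]
    # Days until the tutorial's weekday; 0 means the tutorial is today.
    days_ahead = (tut.weekday - today.weekday()) % 7
    when = today + timedelta(days=days_ahead)
    return f"Your next tutorial ({tut.group}) is on {when:%A %d %B} at {tut.time} in {tut.room}."
```

For example, asking on Wednesday 26 September 2018 as student `z1111111` points to the following Tuesday’s class. A real bot would load the mapping from course records rather than hard-coding it.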

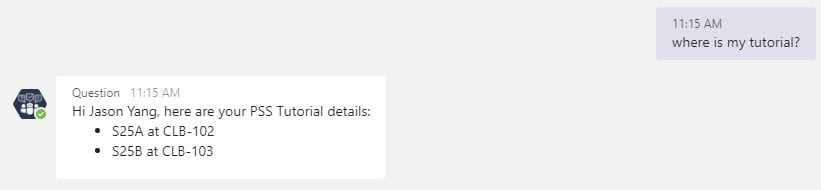

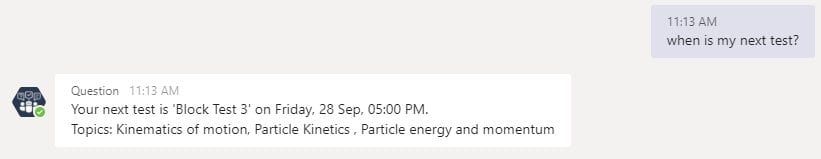

As the screenshots show, the bot now answers questions like:

- “When is my tutorial?”

- “What do I have to do this week?”

- “What are the topics for the next block test?”

The bot looks up date and assessment information to inform students about upcoming tests.

The bot answers questions about individual students' tutorial groups, such as location and time.

Activities for the week are identified by looking up assessment, activity and date information.
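The weekly lookup can be sketched the same way: filter a list of assessment and activity dates down to those falling in the current Monday-to-Sunday week. This is a hedged illustration only; the `Activity` type, the sample entries and `this_week` are assumptions, not the project’s code.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Activity:
    name: str
    due: date


# Hypothetical entries standing in for the assessment and activity timetable.
ACTIVITIES = [
    Activity("Lab report", date(2018, 10, 3)),
    Activity("Assignment 2", date(2018, 9, 28)),
    Activity("Block test 1", date(2018, 9, 24)),
]


def this_week(today: date, activities=ACTIVITIES) -> list:
    """Answer "What do I have to do this week?" for the week containing `today`."""
    start = today - timedelta(days=today.weekday())  # Monday of this week
    end = start + timedelta(days=7)  # the following Monday, excluded
    due = sorted((a for a in activities if start <= a.due < end), key=lambda a: a.due)
    return [f"{a.name} (due {a.due:%a %d %b})" for a in due]
```

Called with `date(2018, 9, 26)`, it returns the block test and the assignment due that week, in date order, and leaves out the lab report due the following Wednesday.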

By personalising the experience for each student, the bot becomes more accessible and helpful, and can easily answer questions relevant to each student’s circumstances.

- Improved search functionality and clearer dashboards

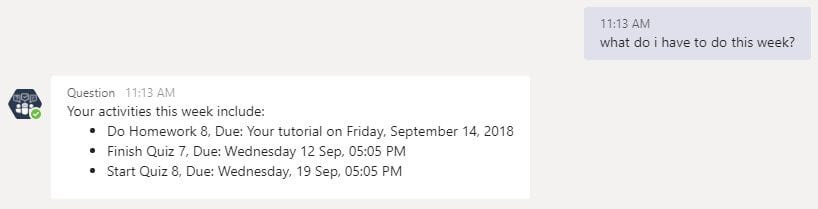

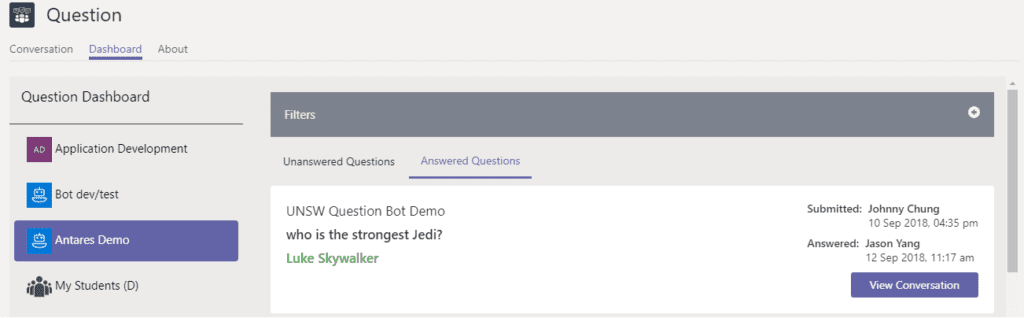

Next, we made it easier for students to find the information they needed by building a questions dashboard tab for the course within Microsoft Teams, as shown in the screenshots.

This tab is available in every channel in the course’s Microsoft Teams environment. Students can see which questions have been asked in each channel, and whether or not they have been answered.

We also built a personalised dashboard tab for each student. This tab lists all the course Teams a student belongs to, and the questions asked within each one.

Tutors and lecturers also have an extra “My Students” tab, which shows all the questions asked by students in their classes. This ensures questions aren’t missed and don’t get lost among other conversations.
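At their core, these dashboard tabs group and filter question records. This minimal sketch assumes a hypothetical `Question` record with channel, asker, topic and answered fields: `channel_summary` produces per-channel answered/unanswered counts, and `my_students` approximates the “My Students” view, with optional status and topic filters and unanswered questions listed first. None of these names come from the project itself.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Question:
    channel: str
    asker: str
    topic: str
    answered: bool


def channel_summary(questions: Iterable[Question]) -> dict:
    """Per-channel counts of answered and unanswered questions."""
    questions = list(questions)
    counts = Counter((q.channel, q.answered) for q in questions)
    return {
        channel: {"answered": counts[(channel, True)], "unanswered": counts[(channel, False)]}
        for channel in sorted({q.channel for q in questions})
    }


def my_students(questions: Iterable[Question], students: set,
                answered: Optional[bool] = None, topic: Optional[str] = None) -> list:
    """Questions asked by a tutor's students, optionally filtered by status and topic."""
    matches = [
        q for q in questions
        if q.asker in students
        and (answered is None or q.answered == answered)
        and (topic is None or q.topic == topic)
    ]
    # False sorts before True, so unanswered questions come first.
    return sorted(matches, key=lambda q: q.answered)
```

Putting unanswered questions at the top of the tutor’s view is one simple way to make sure nothing slips through.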

- Helping the bot to learn from conversations

We knew the bot would deliver the most value if it learned from conversations and could provide answers without human input. To do this, we built an active channel learning workflow to teach the bot how to answer basic questions.

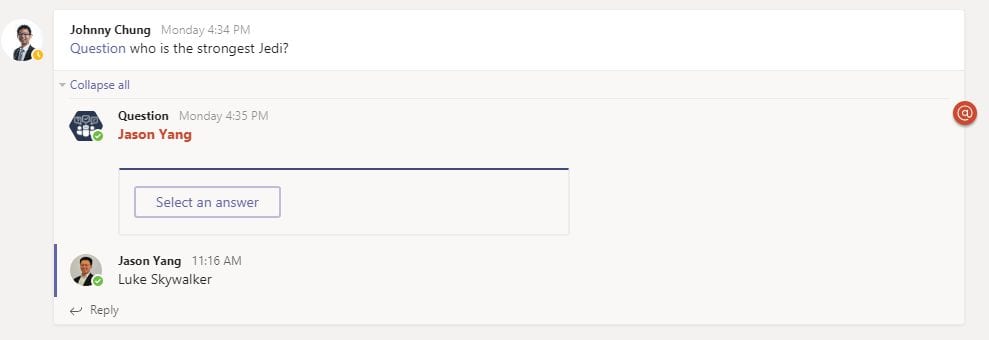

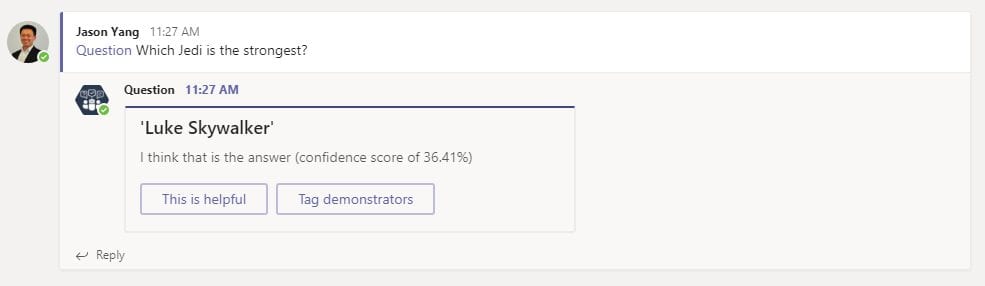

This sample conversation between a student (Johnny) and staff member (Jason) shows how it works.

Johnny asks a question and tags Jason. Jason provides the answer.

The bot learns the answer and attempts to respond the next time the question is asked. Note that the bot also shows a confidence score, which is generated by the natural language processing (NLP) algorithm and helps students judge how accurate its answer is likely to be.
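The post doesn’t say which NLP algorithm produces the confidence score, so this sketch substitutes plain bag-of-words cosine similarity. Each staff reply is stored against the student’s question, and a new question receives the answer to the most similar stored question, with that similarity reported as the confidence. `LearnedAnswers`, its 0.6 `threshold` and the helper functions are assumptions for illustration only.

```python
import math
import re
from collections import Counter
from typing import Optional, Tuple


def _vector(text: str) -> Counter:
    # Lower-cased word counts: a deliberately simple stand-in for a real NLP model.
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LearnedAnswers:
    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold
        self.pairs = []  # (word-count vector of a question, staff answer)

    def learn(self, question: str, staff_answer: str) -> None:
        """Store a staff member's reply against the student's question."""
        self.pairs.append((_vector(question), staff_answer))

    def answer(self, question: str) -> Optional[Tuple[str, float]]:
        """Return (answer, confidence) for the most similar learned question, or None."""
        q = _vector(question)
        scored = [(_cosine(q, vec), ans) for vec, ans in self.pairs]
        if not scored:
            return None
        confidence, best = max(scored, key=lambda pair: pair[0])
        if confidence < self.threshold:
            return None  # not confident enough: leave it for a human to answer
        return best, round(confidence, 2)
```

Rewordings that share most of their words with a learned question get an answer and a score, while unrelated questions return `None` and stay with staff. A bag-of-words model misses paraphrases that use different words, which is why a trained NLP service is the better choice in practice.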

What’s next?

These are just some of the capabilities we’ve developed in phase two. We have also experimented with cutting-edge features including:

- Microsoft Stream transcript analysis and timestamp linking – lecture recordings are captured using Microsoft Stream. By identifying the keywords in a question and comparing them to the Microsoft Stream transcript, we can provide students with timestamp links to the relevant point in the appropriate lecture to help answer their question.

- QR code question detection – Students had a habit of taking a photo of the question book and asking questions such as “how do I start this question?” or “how do I calculate the force at this point?”. These generic questions make it hard for the bot to recognise which question is being referenced. By adding QR codes to the course booklet, we allow the bot to identify the question in a photo and provide a better contextual answer.

- Creating an upvote/downvote system so that the most helpful answers are presented to users first
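Of these features, transcript linking is the easiest to sketch. Assuming the transcript is available as a list of `(start_seconds, caption_text)` segments (a hypothetical format), this Python example removes common stop words from the question, scores each segment by the number of keywords it shares with the question, and returns the start time of the best match. The stop-word list and `best_timestamp` are illustrative; turning the timestamp into a clickable link depends on the video platform’s URL format, which isn’t covered here.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical stop-word list; a real system would use a fuller one.
STOPWORDS = {
    "a", "an", "and", "are", "at", "do", "does", "for", "how", "i", "in", "is",
    "it", "of", "on", "the", "this", "to", "we", "what", "when", "where", "why", "you",
}


def keywords(text: str) -> set:
    """Lower-cased words in `text`, minus stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def best_timestamp(question: str, segments: List[Tuple[int, str]]) -> Optional[str]:
    """Start time (h:mm:ss) of the segment sharing the most keywords with `question`."""
    wanted = keywords(question)
    best_start, best_hits = None, 0
    for start, text in segments:
        hits = len(wanted & keywords(text))
        if hits > best_hits:  # ties keep the earlier segment
            best_start, best_hits = start, hits
    if best_start is None:
        return None  # no shared keywords at all
    hours, rest = divmod(int(best_start), 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"
```

Counting shared keywords is crude; weighting rarer words more heavily (for example with TF-IDF) would stop common course vocabulary from dominating the match.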

We’re excited to bring you more news about these features and more as the project progresses. In the meantime, to learn more about Antares and its approach to building chatbots, download our free guide to conversational UI or phone 02 8275 8811.